A Closer Look at V* - Visual Search that Outperforms GPT-4V

- Introduction

- Explain Like I’m Five

- Comparison to GPT-4V

- See It In Action

- Is V* Really Better Than GPT-4V?

- Conclusion

Introduction

GPT-4V has been widely regarded as the leading vision model, but a new era emerges with V* and the SEAL (Show, SEArch, and TelL) framework. V* offers a more accurate way to process fine details in high-resolution images, where GPT-4V can fail.

Here I link the project website and the paper:

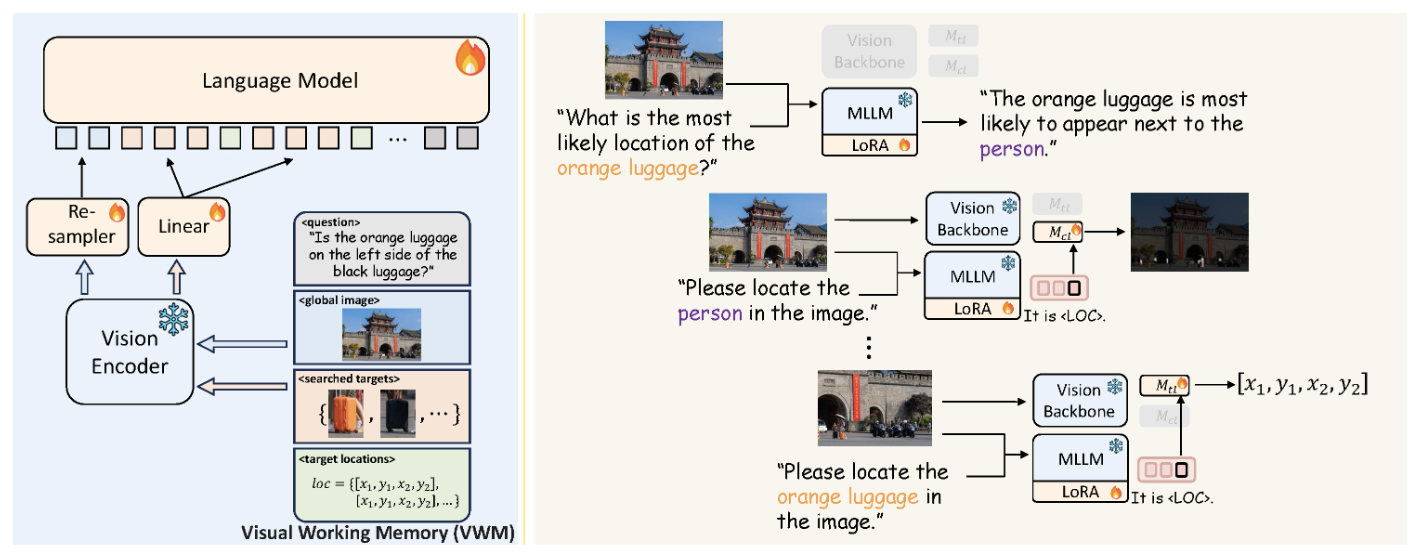

Explain Like I’m Five

I won’t delve deeply into the specifics of the framework and mathematics underpinning the model, as they can be found on the project’s website and in the accompanying paper. Instead, I aim to describe the methodology in simple terms, which is my preferred approach to learning.

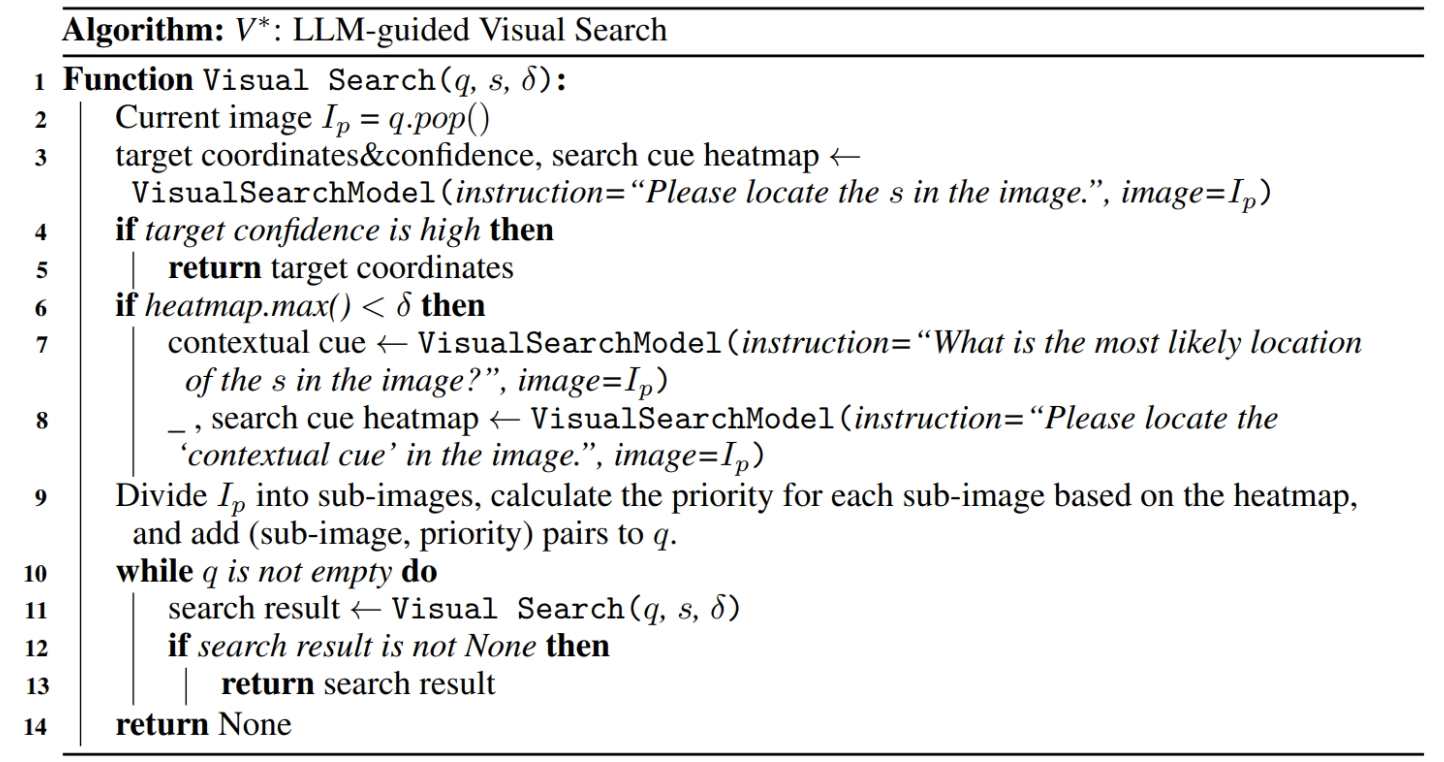

To put it simply, the V* search emulates the way humans undertake a visual search task:

- Initially, we identify the characteristics of the object we’re seeking, such as the color of a beverage.

- Next, we deduce the most probable location of the object; for instance, a beverage is likely found on a table.

- Subsequently, we scout the general location first, like the table.

- After locating the general area, we narrow our focus and scrutinize the surroundings for further details about the objects there, such as identifying the beverage and its color.

Given that Large Language Models (LLMs) possess the capability to comprehend the task and contextualize accordingly, we can direct an LLM to execute the aforementioned steps. This process is repeated until there is strong confidence that the target has been located. This, in essence, is V*: LLM-guided Visual Search.
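To make the loop concrete, here is a minimal Python sketch of the "narrow down until confident" idea. It is not the actual V* implementation: `confidence` is a hypothetical stand-in for whatever score the LLM-guided model would return, and `toy_confidence`, the 1024-pixel image, and the target position are made up so the example runs on its own.

```python
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def split_quadrants(box: Box) -> List[Box]:
    """Split a box into four equal quadrants."""
    left, top, right, bottom = box
    mid_x, mid_y = (left + right) // 2, (top + bottom) // 2
    return [
        (left, top, mid_x, mid_y),      # top-left
        (mid_x, top, right, mid_y),     # top-right
        (left, mid_y, mid_x, bottom),   # bottom-left
        (mid_x, mid_y, right, bottom),  # bottom-right
    ]


def zoom_until_confident(
    image_box: Box,
    confidence: Callable[[Box], float],  # hypothetical stand-in for the model
    threshold: float = 0.8,
    max_steps: int = 10,
) -> Optional[Box]:
    """Keep zooming into the most promising quadrant until confident."""
    current = image_box
    for _ in range(max_steps):
        if confidence(current) >= threshold:
            return current  # confident the target is inside this region
        # Not confident yet: narrow the focus to the best-scoring quadrant.
        current = max(split_quadrants(current), key=confidence)
    return None  # gave up after max_steps


def toy_confidence(box: Box, target=(700, 300), image_width=1024) -> float:
    """Made-up score: higher for smaller boxes that contain the target."""
    left, top, right, bottom = box
    inside = left <= target[0] < right and top <= target[1] < bottom
    return 1 - (right - left) / image_width if inside else 0.0


result = zoom_until_confident((0, 0, 1024, 1024), toy_confidence)
print(result)  # (640, 256, 768, 384)
```

This greedy version never backtracks. The real search keeps a queue of candidate regions so it can return to a region it skipped earlier.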

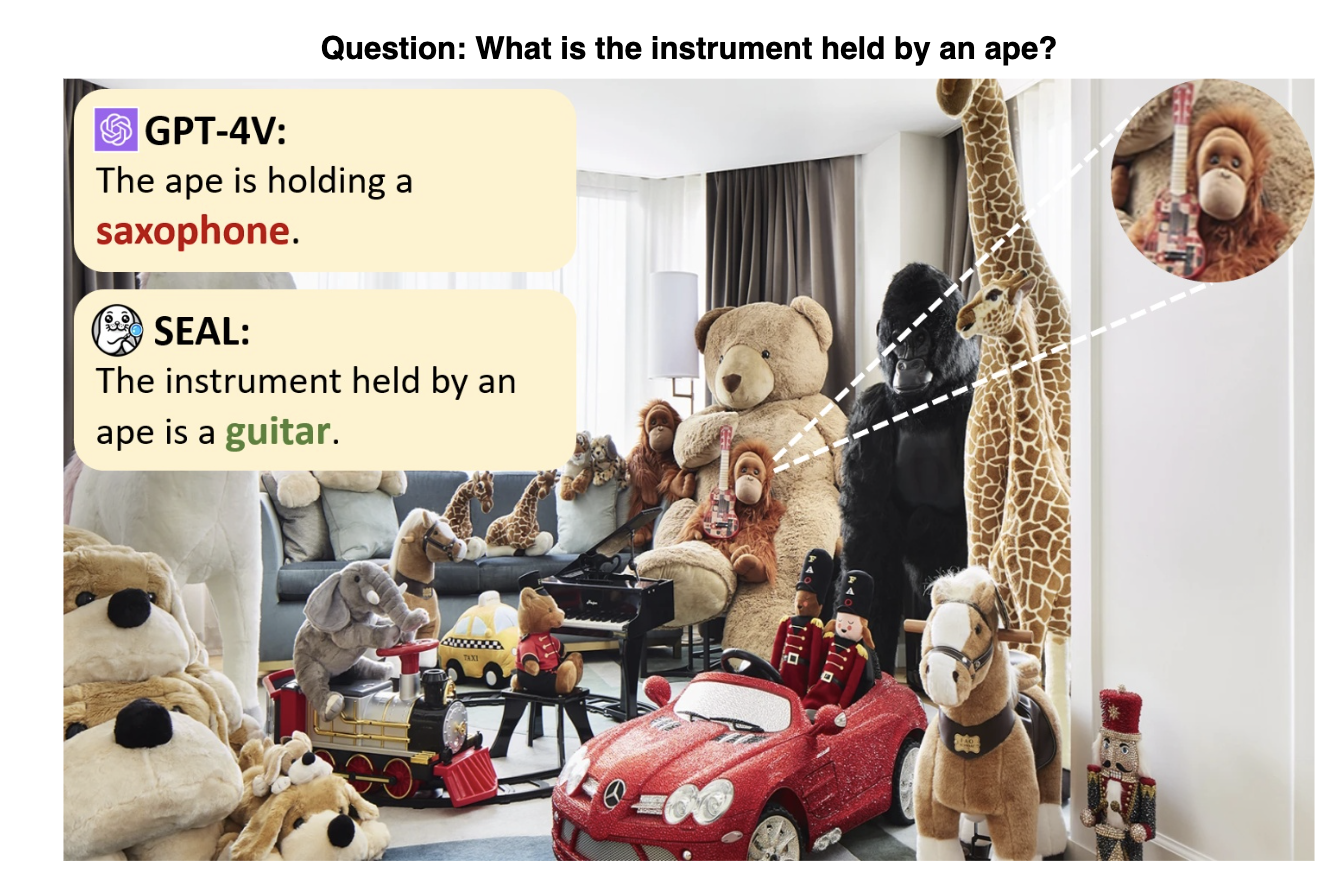

Comparison to GPT-4V

Despite GPT-4V’s advancements, it falters in analyzing high-resolution images and intricate visual tasks, often processing images as a whole, thus leading to inaccuracies. It lacks the nuanced, selective focus required for complex visual analysis, struggling with fine details and deep contextual understanding.

Conversely, SEAL V* excels by strategically identifying and focusing on specific image sections, iteratively refining its search. This targeted approach enables V* to surpass GPT-4V’s performance in detailed visual tasks.

See It In Action

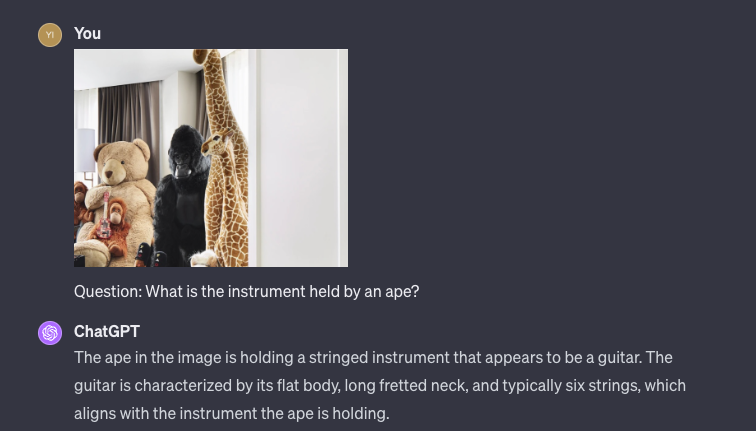

I’ll walk through how SEAL V* works step by step, using the ape-and-guitar image above:

- Look at the whole image and the question “What is the instrument held by an ape?”. Decision: “Need to conduct visual search to search for: instrument held by an ape.”

- Divide the image into 4 sections, order them by target likelihood, and add them to the search queue. Search the section at the top of the queue (the one with the highest likelihood, provided that likelihood is above the threshold). Decision: search the bottom-left corner and produce a probability heatmap of the target.

- The heatmap highlights the area around the car, so divide the bottom-left section into 4 smaller sections, pick the one with the most heat (the car), and add it to the queue for a later search.

- Move on to the bottom-right corner and produce a probability heatmap of the target. Again, something shows up on the heatmap, so divide this section into 4 as well and add the pieces to the search queue.

- Search the next item in the queue, the car crop. The probability is not over the threshold, so move on.

- Search the next item in the queue: ape found, return the results.

Here is the output of the program. Note the parameters can all be adjusted:

- Confidence threshold: decides whether to continue searching or whether the model is confident enough to return a result.

- Image crop size: how small each crop can be. The search stops at a minimal size, e.g. 255x255.

- Image crop number: how many sections an image is divided into.

    CPU times: user 42.5 s, sys: 2.77 s, total: 45.2 s
    Wall time: 42.5 s
    ('Need to conduct visual search to search for: instrument held by an ape.',
     'Targets located after search: instrument held by an ape.',
     array([[[255, 255, 255],
             [255, 255, 255],
             [255, 255, 255],
             ...,
             [255, 255, 255],
             [255, 255, 255],
             [255, 255, 255]]], dtype=uint8),
     'The instrument held by an ape is a guitar.')
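The walkthrough above is essentially a best-first search over image regions. Here is a minimal Python sketch of that idea, not the actual V* code: `likelihood` and `found` are hypothetical stand-ins for the model's heatmap score and its confident detection, and the toy scene (a distracting car in the bottom left, the ape in the bottom right) is invented for illustration.

```python
import heapq
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def quadrants(box: Box) -> List[Box]:
    """Split a box into four equal sections."""
    left, top, right, bottom = box
    mx, my = (left + right) // 2, (top + bottom) // 2
    return [(left, top, mx, my), (mx, top, right, my),
            (left, my, mx, bottom), (mx, my, right, bottom)]


def best_first_search(
    image_box: Box,
    likelihood: Callable[[Box], float],  # hypothetical heatmap-style score
    found: Callable[[Box], bool],        # hypothetical confident detection
    min_size: int = 255,                 # smallest allowed crop side
) -> Tuple[Optional[Box], List[Box]]:
    """Return the box where the target was found, plus the visit order."""
    # heapq is a min-heap, so negate the score to pop the best region first.
    queue = [(-likelihood(image_box), image_box)]
    visited: List[Box] = []
    while queue:
        _, box = heapq.heappop(queue)
        visited.append(box)
        if found(box):
            return box, visited
        left, top, right, bottom = box
        if min(right - left, bottom - top) // 2 < min_size:
            continue  # sub-sections would be below the minimum crop size
        for child in quadrants(box):
            heapq.heappush(queue, (-likelihood(child), child))
    return None, visited


# Toy scene, made up for this example.
CAR, APE = (200, 800), (800, 800)


def contains(box: Box, point: Tuple[int, int]) -> bool:
    left, top, right, bottom = box
    return left <= point[0] < right and top <= point[1] < bottom


def toy_likelihood(box: Box) -> float:
    if contains(box, CAR):
        return 0.6  # the car distracts the heatmap
    return 0.5 if contains(box, APE) else 0.1


def toy_found(box: Box) -> bool:
    # Only "confident" once the ape fills a small enough crop.
    return contains(box, APE) and box[2] - box[0] <= 256


found_box, visited = best_first_search((0, 0, 1024, 1024), toy_likelihood, toy_found)
```

In this toy run the search visits the whole image, the bottom-left section, the car crop, the bottom-right section, and finally the crop containing the ape. The exact order depends on the scores, so it is not meant to reproduce the order of the real run above.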

Is V* Really Better Than GPT-4V?

In my opinion, only in limited conditions.

This is because the accuracy comes at a high cost. This method of guided search reminds me of LangChain and AutoGPT, where you ask LLMs to think step by step and, at each step, repeatedly ask them to perform further actions. The result? As you can see above, the runtime is very long. This delay comes from calling the LLM multiple times to perform actions. In some cases, the number of steps can grow to over 20, and the runtime will be a few minutes!
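To get a feel for how quickly the number of steps can grow, here is a rough back-of-the-envelope sketch in Python. It assumes a square image, a split into 4 sections that halves the side length each time, and the 255-pixel minimum crop size mentioned above. It counts every region a full subdivision would produce; the guided search normally visits only a fraction of them.

```python
def worst_case_regions(image_size: int, min_crop: int = 255) -> int:
    """Count all regions a full 4-way subdivision produces, root included.

    Assumption: each split halves the side length, and splitting stops
    once the next crop would be smaller than min_crop.
    """
    total = 0
    regions_at_level = 1
    size = image_size
    while True:
        total += regions_at_level
        size //= 2  # side length of the next level of crops
        if size < min_crop:
            break
        regions_at_level *= 4  # every region splits into 4 sections
    return total


print(worst_case_regions(1024))  # 21  (1 + 4 + 16)
print(worst_case_regions(2048))  # 85  (1 + 4 + 16 + 64)
```

Each visited region costs at least one model call, so even a 1024-pixel image has room for more than 20 steps in the worst case under these assumptions.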

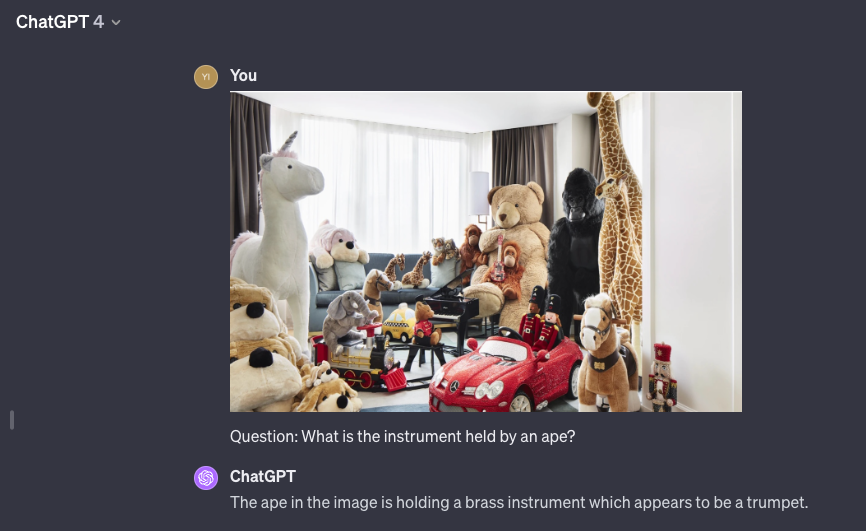

In theory, the underlying LLM, LLaVA-7B, is not as performant as GPT-4V. So I conducted a similar experiment with GPT-4V, following the SEAL approach of dividing the image into smaller pieces and questioning it about each one; here are the results:

Therefore, it’s evident that GPT-4V could yield more accurate results with detailed, cropped images. Moreover, substituting the LLM in V* with GPT-4V might further enhance the performance.
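For anyone who wants to try a similar manual experiment, here is a small Python sketch that computes the crop boxes for a grid of sections. The function name and the 2x2 default are my own choices for illustration, not part of SEAL. The boxes use the (left, upper, right, lower) order that Pillow's `Image.crop` expects, assuming Pillow is what you use to cut the image.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, upper, right, lower) in pixels


def grid_boxes(width: int, height: int, rows: int = 2, cols: int = 2) -> List[Box]:
    """Return crop boxes that tile a width x height image, row by row."""
    boxes = []
    for r in range(rows):
        for c in range(cols):
            # Integer boundaries computed this way leave no gaps or
            # overlaps, even when the size is not evenly divisible.
            left, right = c * width // cols, (c + 1) * width // cols
            top, bottom = r * height // rows, (r + 1) * height // rows
            boxes.append((left, top, right, bottom))
    return boxes


boxes = grid_boxes(1000, 600)
print(boxes)
# [(0, 0, 500, 300), (500, 0, 1000, 300), (0, 300, 500, 600), (500, 300, 1000, 600)]
```

Each box can then be cropped out and sent to the model as its own image, together with the original question.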

Conclusion

While it may make compelling headlines to claim that SEAL V* surpasses GPT-4V, this advantage comes at the high cost of performing repeated searches on smaller segments of each image.

One potential optimization could involve using an LLM to guide the search, combined with a CNN like YOLO for object detection. This could enhance efficiency, as relying solely on LLMs can be slow and costly. However, this approach requires a CNN trained for specific tasks, which limits its generalizability.

Nonetheless, the SEAL (Show, SEArch, and TelL) framework represents an innovative approach to visual search, mimicking human behavior. It demonstrates superior accuracy in conducting detailed searches in high-resolution images. This method is likely to pave the way for new visual search techniques, potentially leading to solutions that are both fast and precise.

Thank you for reading!